So, you’ve decided to enter the world of data analytics to promote your new product. Or perhaps your latest grant proposal is centered on an evidence-based approach, rooted deep in data. Maybe you’re just interested in leveraging internal markers of success to make your company better. These are all great reasons to collect and use data to guide your decision making.

Data-driven decisions, when properly framed and thoughtfully applied, can give your organization or project an undeniable edge. But whether you are conducting research or need data for business, accurately gathering and analyzing data is not always as simple as it sounds.

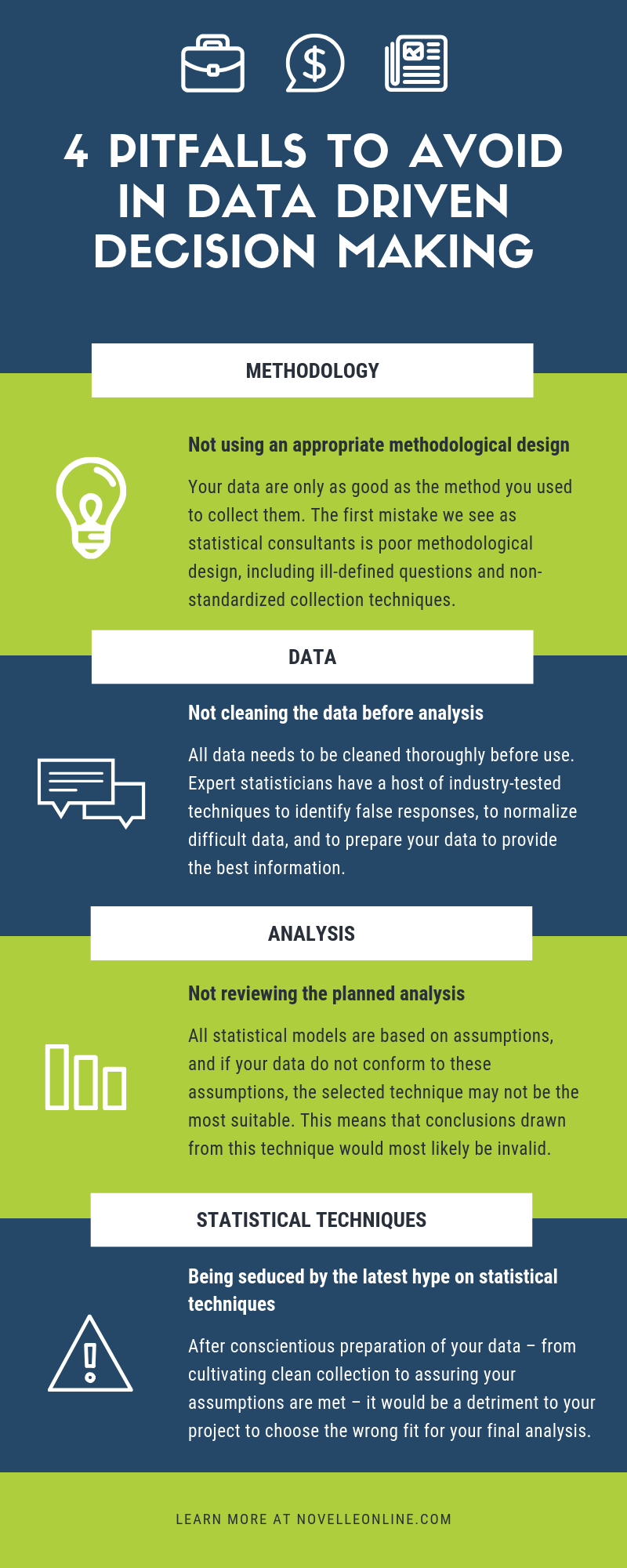

Here are the top four pitfalls to avoid when using data for decision making:

1) Not using an appropriate methodological design

First and foremost, your data are only as good as the method you used to collect them. The first and biggest mistake we see as statistical consultants is poor methodological design, including ill-defined questions and non-standardized collection techniques. Once you’ve identified what you want to know, you must pause and ask yourself again, “What do I really want to know?” Take the time to operationalize your variables by asking, for example, “How will I measure success?” and “What metric, specifically, should I use?” Once you’ve broken the question down into its essential parts, you have to decide how to collect the data. This is where quality control is critical. How will you administer that survey or data collection tool? Whom will you train to manage the data archives? These steps require detailed planning and vision, and often specialized expertise, to ensure proper execution.

2) Not cleaning the data before analysis

Let’s say you used the perfect methodological design and kept impeccable control over the collection process. The resulting data will still need to be cleaned thoroughly before use. What is data cleaning, and why is it necessary? Human response data are inherently messy. The examples here come from surveys, but the general principle applies to any research project that gathers new knowledge from human beings.

People who complete data collection tools such as surveys don’t always understand the questions, and some don’t take the time needed to give genuine answers. This can be especially true on online survey platforms such as Amazon’s Mechanical Turk. In addition, survey bots are becoming increasingly common, filling your data set with rote responses that are unusable for analysis or for drawing sound conclusions. Your data must be reviewed and cleaned of survey bots, non-compliant participants, and responses from those who were simply confused by the directions. Expert statisticians have a host of industry-tested techniques to identify false responses, normalize difficult data, and prepare your data to provide the best information.
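To make the cleaning step concrete, here is a minimal sketch of three common screening checks: straight-lining (the same answer to every item), implausibly fast completion, and a failed attention check. The `Response` fields, the 60-second cutoff, and the expected attention-check value are all assumptions chosen for illustration, not recommended settings; real thresholds depend on your instrument and should be set before you look at the results.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Response:
    """One survey submission (hypothetical structure for illustration)."""
    respondent_id: str
    answers: List[int]       # e.g. Likert items scored 1-5
    seconds_taken: float     # total completion time
    attention_check: int     # item where respondents were told which value to pick


def flag_response(r: Response, min_seconds: float = 60.0,
                  expected_check: int = 3) -> List[str]:
    """Return the reasons a response looks unusable (empty list if none)."""
    reasons = []
    if len(set(r.answers)) == 1:           # identical answer to every item
        reasons.append("straight-lining")
    if r.seconds_taken < min_seconds:      # finished faster than the assumed cutoff
        reasons.append("too fast")
    if r.attention_check != expected_check:
        reasons.append("failed attention check")
    return reasons


def clean_responses(responses: List[Response], **thresholds
                    ) -> Tuple[List[Response], Dict[str, List[str]]]:
    """Split responses into kept ones and a log of dropped ones with reasons."""
    kept, dropped = [], {}
    for r in responses:
        reasons = flag_response(r, **thresholds)
        if reasons:
            dropped[r.respondent_id] = reasons
        else:
            kept.append(r)
    return kept, dropped


if __name__ == "__main__":
    sample = [
        Response("p01", [4, 2, 5, 3, 4], 210.0, 3),
        Response("p02", [3, 3, 3, 3, 3], 12.0, 3),
        Response("p03", [5, 4, 4, 2, 1], 180.0, 1),
    ]
    kept, dropped = clean_responses(sample)
    print("kept:", [r.respondent_id for r in kept])
    print("dropped:", dropped)
```

Keeping a log of what was dropped and why, rather than silently deleting rows, lets you report exclusions transparently and revisit the rules later.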

3) Not reviewing the planned analysis

Now that you have a great design, controlled collection, and squeaky-clean data, the next step is to double-check that the data meet the requirements of your proposed statistical technique. All statistical models are based on assumptions, and if your data do not conform to these assumptions, the selected technique may not be suitable. Conclusions drawn from it may be invalid because the analysis did not follow the technique’s rules of use. For example, many of the relationships we study in human behavior are approximately linear, so we often use the General Linear Model to describe relationships and uncover insights. But before we conduct any analyses, we must check the assumptions of the model: Are the residuals approximately normally distributed? Are the variances similar across groups? Is every variable measured at the appropriate level? To ensure the analysis is really telling you what you believe it is, follow this step strictly. Using the correct statistical tests for your data will set you up for success.
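As a rough illustration of what such checks can look like, the sketch below fits a straight line by ordinary least squares, then computes two simple diagnostics: the skewness of the residuals (values far from zero suggest a lopsided, non-normal distribution) and the ratio of the larger to the smaller group variance (values far above 1 suggest unequal variances). The function names are hypothetical, and these are quick descriptive screens only, assumed here for illustration; they do not replace formal tests or diagnostic plots.

```python
import statistics as st
from typing import List, Sequence, Tuple


def fit_line(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least squares for y = intercept + slope * x."""
    mx, my = st.fmean(x), st.fmean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, my - slope * mx


def residuals(x: Sequence[float], y: Sequence[float]) -> List[float]:
    """Observed minus fitted values for the least-squares line."""
    slope, intercept = fit_line(x, y)
    return [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]


def skewness(values: Sequence[float]) -> float:
    """Population skewness; roughly 0 for a symmetric distribution.

    Assumes the values are not all identical (non-zero spread).
    """
    m = st.fmean(values)
    sd = st.pstdev(values)
    return sum(((v - m) / sd) ** 3 for v in values) / len(values)


def variance_ratio(group_a: Sequence[float], group_b: Sequence[float]) -> float:
    """Larger sample variance divided by the smaller one (always >= 1)."""
    va, vb = st.variance(group_a), st.variance(group_b)
    return max(va, vb) / min(va, vb)


if __name__ == "__main__":
    # Made-up numbers purely to show the calls, not real study data.
    hours = [1, 2, 3, 4, 5, 6, 7, 8]
    score = [52, 55, 61, 60, 68, 71, 70, 78]
    res = residuals(hours, score)
    print("residual skewness:", round(skewness(res), 3))
    print("variance ratio (first half vs second half):",
          round(variance_ratio(res[:4], res[4:]), 3))
```

If either diagnostic looks off, that is a prompt to look more closely, for example with a histogram of the residuals, before trusting the model’s output.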

4) Being seduced by the latest hype on statistical techniques

Finally, choosing the right analysis can be confusing. As experienced statisticians, we always follow the Law of Parsimony, also known as Occam’s Razor: the simplest approach is almost always the best place to start. In a world of Big Data and increasingly complex analytics, it can be tempting to opt for the “hot new” procedure or the latest development in applied statistics. But over a decade of experience analyzing data sets big and small has taught us that a stripped-down, clear-cut starting place will usually lead you to your goal along the most expeditious path. After conscientious preparation of your data, from cultivating clean collection to ensuring your assumptions are met, it would be a detriment to your project to choose the wrong fit for your final analysis.

Novelle’s research experts can help you pick the right design and analysis for your experiment or research question. Whether you are a research organization needing start-to-finish methodological and statistical assistance or a business seeking to better understand how statistics can help build a better company, our team has broad and deep experience extracting answers with exacting standards. Let us consult on your next data-driven project and see the results for yourself!

Rita Simmons is the founder and lead consultant of Novelle, where she provides business and research consulting to companies across a variety of industries. Dr. Simmons leverages her drive for innovation and excellence along with her extensive executive and military experience to help business owners grow their business, drive revenue, and achieve strategic goals. When you’re ready to take your business to the next level, contact Dr. Simmons at info@novelleonline.com or connect with her on LinkedIn.